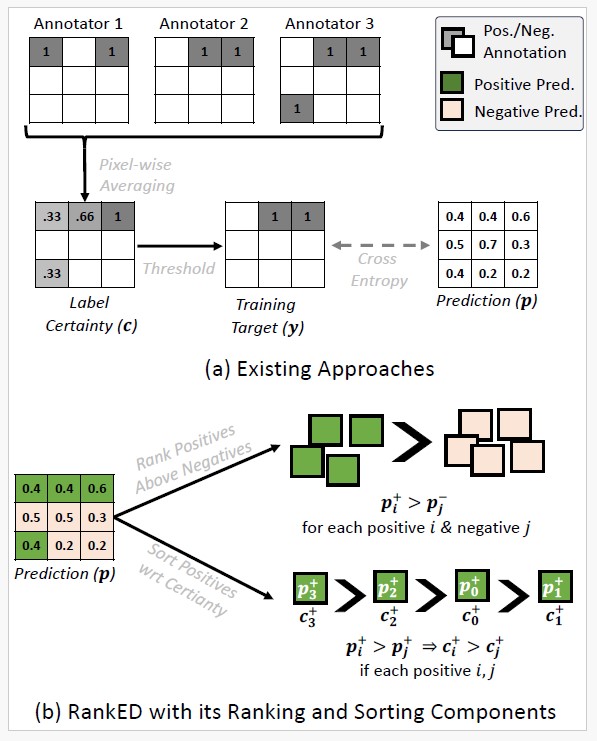

Overview

(a) Current approaches threshold label certainties and use a class-balanced cross-entropy loss to train edge detectors.
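
As a rough illustration of the conventional pipeline in (a), the sketch below binarizes label certainties at a threshold and then applies a class-balanced binary cross-entropy. The function name, the `threshold` value of 0.5, and the choice to treat every pixel below the threshold as a negative are assumptions made for this example. Published edge detectors differ in these details, and some ignore low-certainty pixels instead.

```python
import numpy as np

def class_balanced_bce(probs, certainty, threshold=0.5, eps=1e-7):
    """Class-balanced BCE on thresholded edge labels (illustrative sketch).

    probs:     predicted edge probabilities in (0, 1), any shape
    certainty: label certainty in [0, 1], same shape as probs
    threshold: assumed cut-off; pixels at or above it become positives
    """
    probs = np.clip(np.asarray(probs, dtype=float).ravel(), eps, 1 - eps)
    certainty = np.asarray(certainty, dtype=float).ravel()

    # Thresholding discards the certainty values themselves.
    pos = certainty >= threshold
    neg = ~pos
    n_pos, n_neg = pos.sum(), neg.sum()
    n = n_pos + n_neg

    # The rarer class gets the larger weight to offset the imbalance.
    w_pos = n_neg / n
    w_neg = n_pos / n

    loss = -(w_pos * np.log(probs[pos]).sum()
             + w_neg * np.log(1.0 - probs[neg]).sum())
    return loss / n
```

Note that two label maps with different certainties but the same thresholded mask produce the same loss, so the certainty information is lost after binarization.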

(b) With RankED, we propose a unified approach which ranks positives over negatives to handle the imbalance problem and sorts positives with respect to their certainties.
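
The two objectives in (b) can be made concrete with a simple pairwise count. The sketch below is not the RankED loss itself. It is a non-differentiable illustration of what "ranking positives over negatives" and "sorting positives by certainty" measure. The function name and the convention that a pixel is positive when its certainty is greater than zero are assumptions made for this example.

```python
import numpy as np

def ranking_and_sorting_errors(scores, certainty):
    """Pairwise ranking and sorting error rates (illustrative sketch).

    scores:    predicted edge scores, any shape
    certainty: label certainty in [0, 1]; > 0 is assumed to mean positive
    Returns (rank_err, sort_err), each in [0, 1].
    """
    scores = np.asarray(scores, dtype=float).ravel()
    certainty = np.asarray(certainty, dtype=float).ravel()

    pos = certainty > 0
    s_pos, s_neg = scores[pos], scores[~pos]
    c_pos = certainty[pos]

    # Ranking: fraction of (positive, negative) pairs in which the
    # negative is scored at least as high as the positive.
    if s_pos.size and s_neg.size:
        rank_err = float(np.mean(s_neg[None, :] >= s_pos[:, None]))
    else:
        rank_err = 0.0

    # Sorting: among positive pairs (i, j) where i is more certain than j,
    # fraction in which i is not scored strictly higher than j.
    more_certain = c_pos[:, None] > c_pos[None, :]
    misordered = s_pos[:, None] <= s_pos[None, :]
    n_pairs = more_certain.sum()
    sort_err = float((more_certain & misordered).sum() / n_pairs) if n_pairs else 0.0

    return rank_err, sort_err
```

Both quantities depend only on the relative order of the scores, not on the number of negatives per positive, which is why a ranking formulation does not need explicit class weights. The pairwise matrices grow quadratically with the number of pixels, so this form is only suitable for small examples.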

Visual Results

Panels: GT; Ours (after NMS); Ours (after NMS + thinning).